Introduction

Definition of Kubernetes:

Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. It plays a crucial role in DevOps practices by building on standardized container packaging and providing efficient resource utilization, scalability, and high availability. Kubernetes integrates into your DevOps pipeline and provides a consistent, declarative approach to application management.

Importance of Kubernetes for DevOps

Kubernetes is an indispensable tool in the DevOps ecosystem, offering several key benefits:

- Container Orchestration: Automates the deployment and management of containerized applications, simplifying complex deployments across multiple environments.

- Resource Efficiency: Optimizes resource utilization dynamically, maximizing cost-effectiveness and reducing wastage.

- Scalability and High Availability: Provides built-in features for horizontal and vertical scaling, ensuring applications can handle varying demands and maintain high availability.

- Infrastructure Abstraction: Abstracts underlying infrastructure, enabling consistent deployment across different environments and cloud providers.

- CI/CD Integration: Seamlessly integrates with CI/CD tools, automating the build, test, and deployment processes for faster and more reliable software delivery.

- Service Discovery and Load Balancing: Offers built-in capabilities for service discovery and load balancing, facilitating seamless communication between services.

- Ecosystem and Community: Boasts a vibrant ecosystem and a strong community, providing a rich set of tools and best practices for enhanced application deployment and operations.

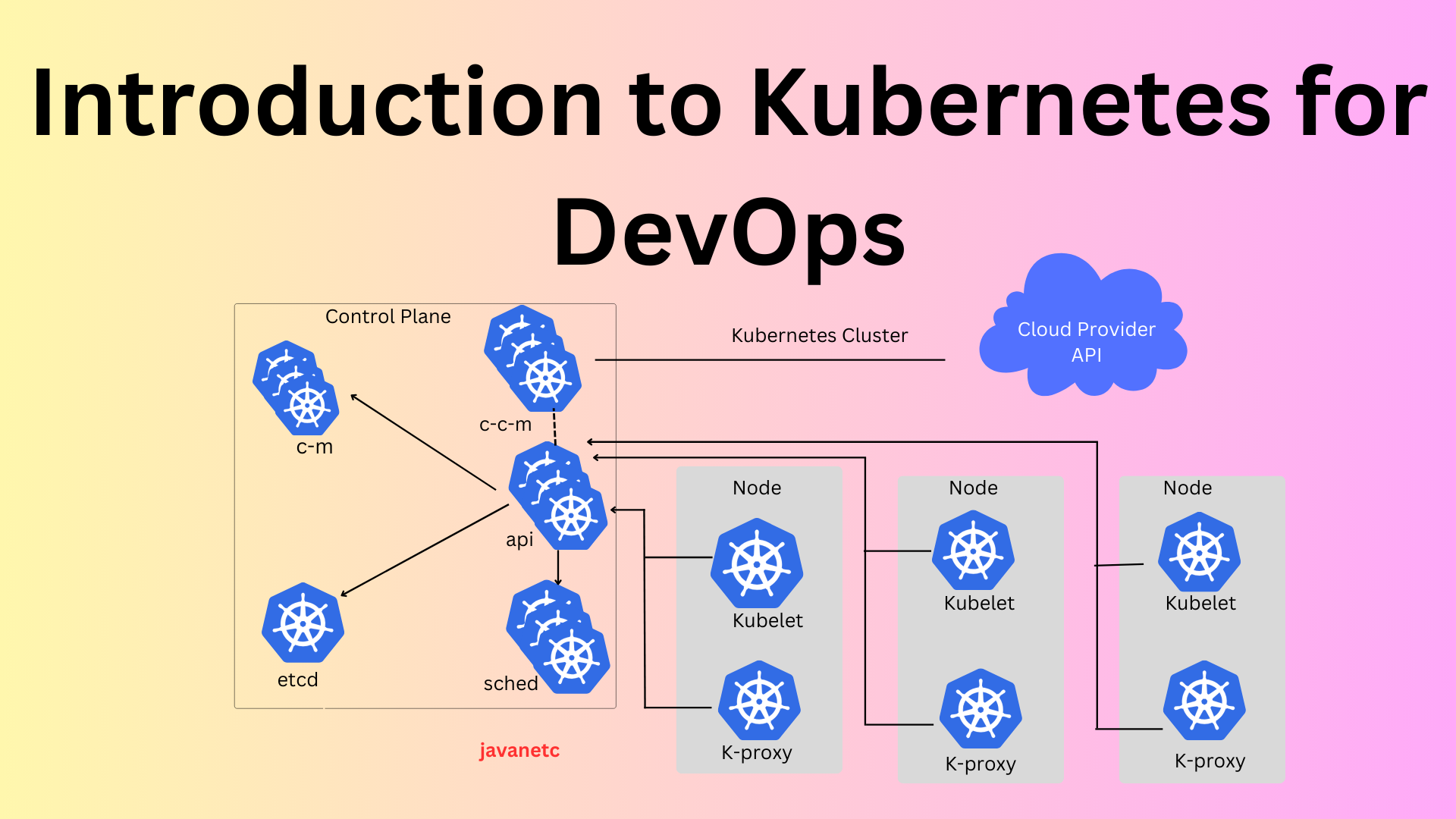

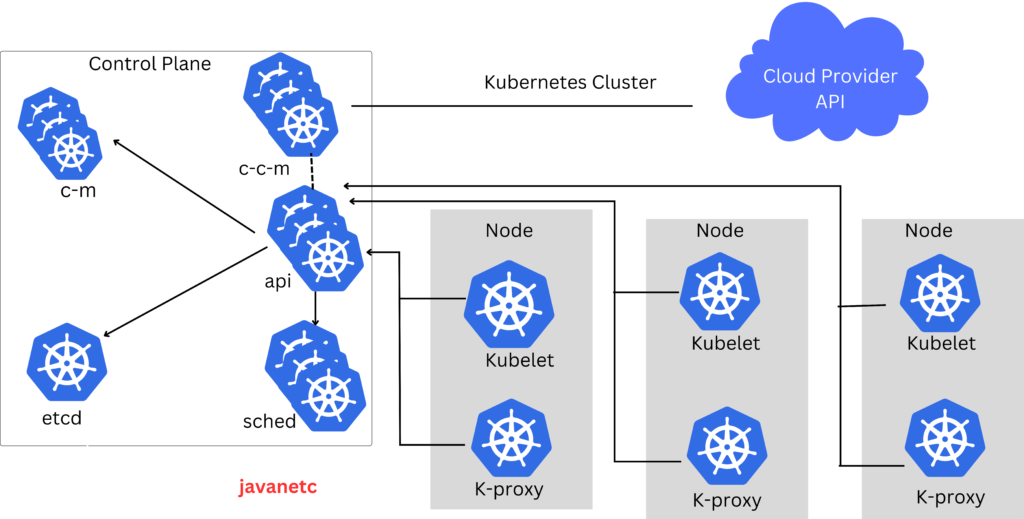

Kubernetes Architecture and Components

Understanding Kubernetes Architecture

Kubernetes follows a control plane and worker node model (historically called the master-worker model), with components such as:

- Control Plane (formerly Master) Node: Manages the overall state of the cluster and includes the API server, etcd (key-value store), controller manager, and scheduler.

- Worker Nodes: Execute containers, managed by components like Container Runtime, Kubelet, and kube-proxy.

- Pods: Basic units encapsulating one or more containers, sharing the same network namespace.

- Services: Expose pods to the network, providing stable IP addresses and load balancing.

- Volumes: Offer persistent storage to containers.

- Labels and Selectors: Attach metadata and filter resources based on labels.

- Controllers: Manage replication, scaling, and updates of pods.

- Add-Ons: Optional components for additional functionality.
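
To make the labels and selectors idea above concrete, here is a minimal sketch of equality-based matching, the kind a Service or controller uses to find its pods. The pod names and labels are hypothetical, and real Kubernetes selectors also support set-based expressions that this sketch leaves out.

```python
from typing import Dict, List


def matches_selector(labels: Dict[str, str], selector: Dict[str, str]) -> bool:
    """Return True if every key/value pair in the selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pods(pods: List[dict], selector: Dict[str, str]) -> List[str]:
    """Return the names of pods whose labels satisfy the selector."""
    return [
        pod["name"]
        for pod in pods
        if matches_selector(pod.get("labels", {}), selector)
    ]


# Hypothetical pods, used only for illustration.
pods = [
    {"name": "web-1", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "labels": {"app": "db", "tier": "backend"}},
]

print(select_pods(pods, {"app": "web"}))  # ['web-1', 'web-2']
```

Because the selector only names the labels it cares about, new pods that carry the same labels are picked up without any change to the Service or controller that selects them.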

Key Kubernetes Components and Their Roles

Control Plane:

- etcd: Stores cluster configuration data as a distributed key-value store.

- API Server: Exposes the Kubernetes API, processes requests, and updates the cluster state.

- Controller Manager: Manages controllers automating tasks in the cluster.

- Scheduler: Assigns pods to nodes based on resource requirements and scheduling policy.
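
The scheduler's job can be pictured as two steps: filter out nodes that cannot run the pod, then score the remaining ones. The sketch below is a deliberately simplified illustration of that idea, not the actual kube-scheduler algorithm; the `Node` and `PodRequest` types and the "most free resources wins" scoring rule are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def schedule(pod: PodRequest, nodes: List[Node]) -> Optional[str]:
    """Pick a node for the pod, or return None if no node has room."""
    # Filter step: keep only nodes with enough free CPU and memory.
    feasible = [
        node
        for node in nodes
        if node.free_cpu_millicores >= pod.cpu_millicores
        and node.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None
    # Score step (assumed rule): prefer the node left with the most free CPU,
    # breaking ties by free memory.
    best = max(
        feasible,
        key=lambda node: (
            node.free_cpu_millicores - pod.cpu_millicores,
            node.free_memory_mib - pod.memory_mib,
        ),
    )
    return best.name


nodes = [
    Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
    Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
    Node("node-c", free_cpu_millicores=100, free_memory_mib=8192),
]
print(schedule(PodRequest("web", cpu_millicores=250, memory_mib=512), nodes))
```

A pod that fits nowhere gets `None` here; in a real cluster such a pod stays in the Pending state until capacity becomes available.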

Nodes:

- Container Runtime: Manages container lifecycle (e.g., containerd or CRI-O; Docker Engine requires the cri-dockerd adapter since Kubernetes 1.24).

- Kubelet: Agent on nodes ensuring container health and reporting to the control plane.

- kube-proxy: Maintains network rules on each node so that traffic addressed to a Service reaches the right pods.

Containers:

- Lightweight, isolated runtime environments packaged with applications.

Networking:

- Facilitates communication between pods on different nodes.

Services:

- Expose pods to the network with stable IP addresses and load balancing.

Volumes:

- Provide persistent storage to containers.

Ingress:

- Exposes HTTP and HTTPS services externally.

Kubernetes API and Its Use in DevOps

Kubernetes API Usage in DevOps:

- Automate deployment, scaling, and updates: Utilize the API to automate various aspects of application lifecycle management.

- Define and manage Infrastructure as Code (IaC): Leverage the API to define and manage cluster configurations.

- Integrate with CI/CD pipelines: Automate the CI/CD process by interacting with the API for building, testing, and deploying containerized applications.

- Manage configuration settings: Utilize the API to define and update application configurations.

- Monitor and troubleshoot cluster state: Leverage the API for monitoring and troubleshooting by accessing cluster information.

- Manage resources optimally: Use the API to adjust resource allocations for optimal utilization.

- Enable rolling updates and rollbacks: Implement automated update strategies using the API.

- Support multi-cloud and hybrid deployments: Use the API for consistent management across different cloud providers and environments.

- Define custom resource definitions (CRDs): Extend functionality by defining custom resources through the API.

- Integrate with ecosystem tools: Leverage the API for seamless integration with various tools and platforms in the Kubernetes ecosystem.
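
As a rough illustration of what "using the API" means in practice, the sketch below builds the HTTP request a pipeline could send to create a Deployment. It only constructs the method, path, and JSON body and sends nothing; the application name, namespace, and image are hypothetical, and authentication is left out.

```python
import json
from typing import Dict, Tuple


def build_create_deployment_request(
    name: str, namespace: str, image: str, replicas: int
) -> Tuple[str, str, str]:
    """Return (HTTP method, API path, JSON body) for creating a Deployment."""
    labels: Dict[str, str] = {"app": name}
    manifest = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "namespace": namespace, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": name, "image": image}]},
            },
        },
    }
    # Deployments live in the apps/v1 API group and are namespaced.
    path = f"/apis/apps/v1/namespaces/{namespace}/deployments"
    return "POST", path, json.dumps(manifest, indent=2)


# Hypothetical values, for illustration only.
method, path, body = build_create_deployment_request(
    name="web", namespace="staging", image="registry.example.com/web:1.4.2", replicas=3
)
print(method, path)
```

The same manifest could be saved to a file and applied with `kubectl apply -f`, which is how the API and the Infrastructure as Code approach fit together.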

Best Practices for Kubernetes in DevOps

Best Practices for Deploying Applications in Kubernetes

- Declarative Configuration: Use YAML or JSON files for reproducibility and ease of management.

- Container Images: Employ container images for consistent packaging and deployment.

- Namespace Isolation: Implement namespace isolation for access control and resource limits.

- Health Checks: Define readiness and liveness probes for application health checks.

- Rolling Updates: Minimize downtime during updates by employing rolling updates.

- Secrets Management: Securely manage sensitive information using Kubernetes secrets.

- Monitoring and Logging: Monitor and log applications for performance insights and issue troubleshooting.

- Backup and Restore: Regularly back up application data and test restores to ensure data integrity and reliability.

- Horizontal Pod Autoscaling (HPA): Automatically adjust the number of pod replicas based on observed metrics such as CPU utilization.

- Immutable Infrastructure: Treat containers as disposable, recreating them for updates instead of modifying them in place.
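
Several of these practices can be checked automatically before a manifest reaches the cluster. The following sketch assumes a container spec held as a plain dictionary and flags two of the items above: missing health probes and images that are not pinned to a specific version. The `lint_container` helper and the sample container are hypothetical; the field names `readinessProbe`, `livenessProbe`, and `httpGet` are the ones Kubernetes uses.

```python
from typing import List


def lint_container(container: dict) -> List[str]:
    """Return a list of best-practice problems found in a container spec."""
    problems: List[str] = []

    # Look only at the last path segment so a registry port is not read as a tag.
    image = container.get("image", "")
    last_segment = image.rsplit("/", 1)[-1]
    if "@" not in last_segment:  # an image pinned by digest is fine as-is
        tag = last_segment.split(":", 1)[1] if ":" in last_segment else ""
        if tag in ("", "latest"):
            problems.append("image is not pinned to a specific tag or digest")

    for probe in ("readinessProbe", "livenessProbe"):
        if probe not in container:
            problems.append(f"{probe} is missing")
    return problems


# Hypothetical container spec, for illustration only.
container = {
    "name": "web",
    "image": "registry.example.com/web:1.4.2",
    "readinessProbe": {"httpGet": {"path": "/ready", "port": 8080}, "periodSeconds": 5},
    "livenessProbe": {"httpGet": {"path": "/healthz", "port": 8080}, "initialDelaySeconds": 10},
}
print(lint_container(container))  # []
```

A check like this could run as a step in a CI pipeline so that unpinned images or probe-less containers are caught at review time rather than in production.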

Scaling Applications in Kubernetes

- Horizontal Pod Autoscaling (HPA): Use HPA for automatic scaling based on metrics.

- Stateless Applications: Design applications to be stateless for seamless scaling and resiliency.

- Resource Allocation Optimization: Optimize resource allocation to prevent overprovisioning.

- ReplicaSets or Deployments: Utilize ReplicaSets or Deployments for managing desired replicas and enabling rolling updates.

- Vertical Scaling: Consider vertical scaling for resource-intensive applications.

- Affinity and Anti-Affinity Rules: Define rules for better load distribution and fault tolerance.

- Application-Level Scaling: Plan for scaling using distributed databases, caching, and queuing systems.

- Continuous Monitoring and Adjustment: Continuously monitor and adjust scaling parameters for optimal performance.

- Performance and Load Testing: Conduct performance and load testing to validate scalability.

- Failure Planning: Plan for failure and design applications to handle failures gracefully.
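
The core of the Horizontal Pod Autoscaler is a simple proportional rule: the desired replica count is the current count multiplied by the ratio of the observed metric to the target, rounded up. The sketch below implements that rule with a 10% tolerance band, which is the documented default; the function name and the default replica bounds are assumptions chosen for the example.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Compute the replica count the HPA rule would ask for."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    # Within the tolerance band the autoscaler leaves the workload alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas at 90% average CPU with a 60% target scale out to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```

The rule also explains why resource requests matter: utilization is measured relative to the requested amount, so inaccurate requests lead to scaling decisions that are too eager or too slow.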

Monitoring and Logging in Kubernetes

- Monitoring Stack: Use widely adopted open-source tools such as Prometheus and Grafana, which integrate closely with Kubernetes but are not part of it.

- Logs Collection and Analysis: Collect and analyze logs from containers, pods, and nodes for insights.

- Meaningful Metrics: Define meaningful metrics related to performance, resource utilization, and health.

- Alerting Based on Thresholds: Implement alerting based on defined thresholds for critical events or anomalies.

- Distributed Tracing Tools: Use distributed tracing tools like Jaeger or Zipkin to track requests across microservices.

- Custom Metrics: Define custom metrics for application-specific performance monitoring.

- Node Health Monitoring: Monitor node health, including CPU, memory, and disk usage.

- Dashboards for Visualization: Create dashboards for visualization of application and cluster health.

- Logging Best Practices: Follow logging best practices, such as using structured logs and avoiding logging sensitive information.
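
As one possible way to follow the structured logging advice above, the sketch below uses Python's standard `logging` module to emit one JSON object per line on standard output, where the container runtime can capture it, and masks fields that look sensitive. The `JsonFormatter` class, the `fields` convention, and the list of sensitive keys are assumptions for illustration, not a standard.

```python
import json
import logging
import sys

# Assumed list of field names that should never appear in logs.
SENSITIVE_KEYS = {"password", "token", "authorization", "api_key"}


class JsonFormatter(logging.Formatter):
    """Format each record as a single JSON line, redacting sensitive fields."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Extra structured fields are passed via logger.info(..., extra={"fields": {...}}).
        for key, value in getattr(record, "fields", {}).items():
            payload[key] = "[REDACTED]" if key.lower() in SENSITIVE_KEYS else value
        return json.dumps(payload, sort_keys=True)


handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger = logging.getLogger("app")
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("user login", extra={"fields": {"user": "alice", "password": "hunter2"}})
```

Writing JSON lines to standard output keeps the application unaware of the log pipeline, so the same image works with whichever collector the cluster uses.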

Kubernetes Security and Compliance Best Practices

- Role-Based Access Control (RBAC): Restrict permissions and limit access using RBAC.

- Network Policies: Enable network security with Network Policies to control traffic flow.

- Pod Security Standards: Enforce security policies for pods with Pod Security Admission or other admission controllers. PodSecurityPolicy (PSP) was deprecated in Kubernetes 1.21 and removed in 1.25.

- Regular Updates: Keep Kubernetes components and containers up-to-date with the latest patches and security updates.

- Container Image Scanning: Identify and mitigate vulnerabilities in container images using scanning tools.

- Auditing and Logging: Enable auditing and logging to track and monitor activity within the cluster.

- Secrets Management: Securely manage sensitive information using Secrets or external secret management tools.

- Pod-Level Security Measures: Implement measures like running containers as non-root users and using read-only file systems.

- Configuration Review: Regularly review and audit cluster configurations to address potential security risks.

- Principle of Least Privilege: Only grant necessary permissions to prevent unauthorized access.
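
The pod-level measures listed above map onto fields of the `securityContext` in a pod spec. As a hedged sketch, the function below inspects a pod spec held as a dictionary and reports containers that may run as root, have a writable root filesystem, allow privilege escalation, or run privileged. The `audit_pod_spec` helper and the sample spec are hypothetical; the field names are the ones Kubernetes defines.

```python
from typing import List


def audit_pod_spec(pod_spec: dict) -> List[str]:
    """Return human-readable findings for risky container security settings."""
    findings: List[str] = []
    pod_ctx = pod_spec.get("securityContext", {})
    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext", {})
        # A container-level runAsNonRoot overrides the pod-level value.
        run_as_non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot", False))
        if not run_as_non_root:
            findings.append(f"{name}: runAsNonRoot is not set to true")
        if not ctx.get("readOnlyRootFilesystem", False):
            findings.append(f"{name}: root filesystem is writable")
        # Treated as allowed unless explicitly disabled.
        if ctx.get("allowPrivilegeEscalation", True):
            findings.append(f"{name}: privilege escalation is not disabled")
        if ctx.get("privileged", False):
            findings.append(f"{name}: container runs privileged")
    return findings


# Hypothetical hardened pod spec, for illustration only.
hardened = {
    "securityContext": {"runAsNonRoot": True},
    "containers": [
        {
            "name": "web",
            "securityContext": {
                "readOnlyRootFilesystem": True,
                "allowPrivilegeEscalation": False,
            },
        }
    ],
}
print(audit_pod_spec(hardened))  # []
```

A script like this is only a review aid; enforcement inside the cluster is better left to admission control so that non-compliant pods are rejected outright.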

Use Cases for Kubernetes in DevOps

Microservices Deployment with Kubernetes

- Scalable and Cost-Effective: Kubernetes enables horizontal scaling and self-healing, reducing infrastructure costs.

- Collaboration with Cloud: Facilitates deployment across different cloud providers or on-premises environments.

- Service Discovery and Optimization: Simplifies development and management of microservices-based applications.

- Extensibility and Observability: Allows monitoring and tracing of microservices, with an extensive ecosystem for integration.

- Rapid Deployment and Agility: Enables faster deployment cycles and independent development of microservices.

CI/CD Pipelines with Kubernetes

- Automated Builds and Resilient Deployments: Supports automated building and deployment processes with resiliency features.

- Testing and Rolling Updates: Facilitates testing, rolling updates, and rollbacks for seamless application updates.

- Versioning and Tagging: Allows versioning and tagging of container images for tracking.

- Monitoring and Auditing: Exposes metrics, events, and audit logs that monitoring tools can consume to observe deployments made by CI/CD pipelines.

- Faster Deployments with Cloud: Cloud-agnostic nature enables faster deployments across different environments.
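
When a pipeline triggers a rolling update, the Deployment's `maxSurge` and `maxUnavailable` settings bound how many pods may exist and how many must stay available during the rollout. The sketch below works out those bounds, assuming the documented rounding (percentages round up for `maxSurge` and down for `maxUnavailable`) and the default of 25% for both; the helper names are hypothetical.

```python
from typing import Tuple, Union


def resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Turn an absolute number or a percentage string like '25%' into a pod count."""
    if isinstance(value, int):
        return value
    percent = int(value.rstrip("%"))
    scaled = replicas * percent
    # Integer arithmetic avoids floating-point rounding surprises.
    return -(-scaled // 100) if round_up else scaled // 100


def rolling_update_bounds(
    replicas: int,
    max_surge: Union[int, str] = "25%",
    max_unavailable: Union[int, str] = "25%",
) -> Tuple[int, int]:
    """Return (minimum available pods, maximum total pods) during a rollout."""
    surge = resolve(max_surge, replicas, round_up=True)
    unavailable = resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        # With no room to add or remove pods, a rollout could not make progress.
        raise ValueError("maxSurge and maxUnavailable cannot both be zero")
    return replicas - unavailable, replicas + surge


# With 10 replicas and the defaults: at least 8 available, at most 13 in total.
print(rolling_update_bounds(10))  # (8, 13)
```

Setting `max_unavailable` to 0 with a surge of 1, for example, trades rollout speed for never dropping below the desired capacity.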

Hybrid Cloud Deployment with Kubernetes

- Hybrid Cloud: Facilitates migration and deployment across on-premises and cloud environments.

- Disaster Recovery and Backup: Enhances resilience through replication and disaster recovery strategies.

- Modernizing Applications: Supports modernization by containerizing applications and deploying at the edge.

- Extended Workflows: Extends DevOps workflows across different cloud environments for consistency.

- Resource Optimization and Mobility: Dynamically allocates workloads based on resource availability and mobility requirements.

Kubernetes for Infrastructure Automation

- Avoiding Failures: Automates deployment and management of large-scale infrastructure, reducing failures caused by manual steps.

- Infrastructure Provisioning: Automates provisioning and scaling based on workload demands.

- Automated Monitoring and Updates: Automates updates, monitoring, and logging for efficient management.

- Managing and Scaling: Automates creation, management, and scaling of namespaces and applications.

- Security: Automates management of secrets, networking, and storage for improved security.

Challenges and Limitations of Kubernetes in DevOps

Common Challenges for Teams Using Kubernetes in DevOps

- Learning Curve and Complexity: Kubernetes has a steep learning curve and complex ecosystem.

- Management and Networking: Properly managing resources and networking can be challenging.

- Storage and Scaling: Configuring and managing storage resources and scaling applications pose challenges.

- Updates and Upgrades: Managing updates and upgrades requires careful planning and testing.

- Monitoring and Troubleshooting: Identifying and resolving issues in deployments and monitoring/logging are challenging.

- Security Compliance: Ensuring compliance and security in Kubernetes deployments can be complex.

Limitations of Kubernetes and How to Work Around Them

- Scalability and Complexity: Invest in training, start with small steps, and utilize scaling best practices.

- Networking and Storage: Utilize Kubernetes service objects, implement network policies, and use dynamic provisioning for storage.

- Security: Follow Kubernetes security best practices and implement container image scanning tools.

- Upgrades and Logging: Plan upgrades carefully and implement centralized logging solutions.

- Vendor Lock-In and Portability: Adopt cloud-agnostic practices and use abstraction layers like Helm charts.

- Human Error: Implement automation, use Infrastructure as Code (IaC), and enforce strict change management processes.

Future Trends in Kubernetes for DevOps

- Increased Adoption of Multi-Cloud and Hybrid Cloud Deployments: Organizations will continue to leverage Kubernetes for flexible deployment strategies.

- Enhanced Security Measures: Security will remain a top priority, with advancements in Kubernetes security practices.

- Advanced Automation Capabilities: Automation will play a crucial role in streamlining application deployment and management processes.

- Improved Observability and Monitoring: Enhancements in monitoring and observability tools will provide better insights into cluster and application health.

- Increased Use of Kubernetes Operators: Operators will gain popularity for automating complex operational tasks.

- Integration with Emerging Technologies: Kubernetes will integrate with emerging technologies like machine learning and edge computing for enhanced capabilities.

Conclusion

Kubernetes serves as a cornerstone in DevOps practices, offering automation, scalability, and flexibility for deploying and managing containerized applications. Understanding Kubernetes architecture, components, and best practices is essential for successful adoption in DevOps workflows. Despite challenges, Kubernetes continues to evolve, driving innovation and enabling organizations to deliver software more efficiently and reliably in today’s dynamic IT landscape.